Thoughts on Multi-user VR Development using Unity and Aframe/WebVR

I have posted on Twitter a few times about my attempts at building a multi-device, multi-user framework using both Unity and WebVR/Aframe. In particular, there was some interest in my thoughts on what it is like to develop VR applications in Unity versus something like Aframe (WebVR).

I discuss these in this post, though I should note that I am hardly a technical developer. I have always treated programming as a means to beautiful UX and artistic ends, and I spend much of my time exploring the many different affordances of various social technologies rather than focusing expertly on one area …

Thoughts on Unity

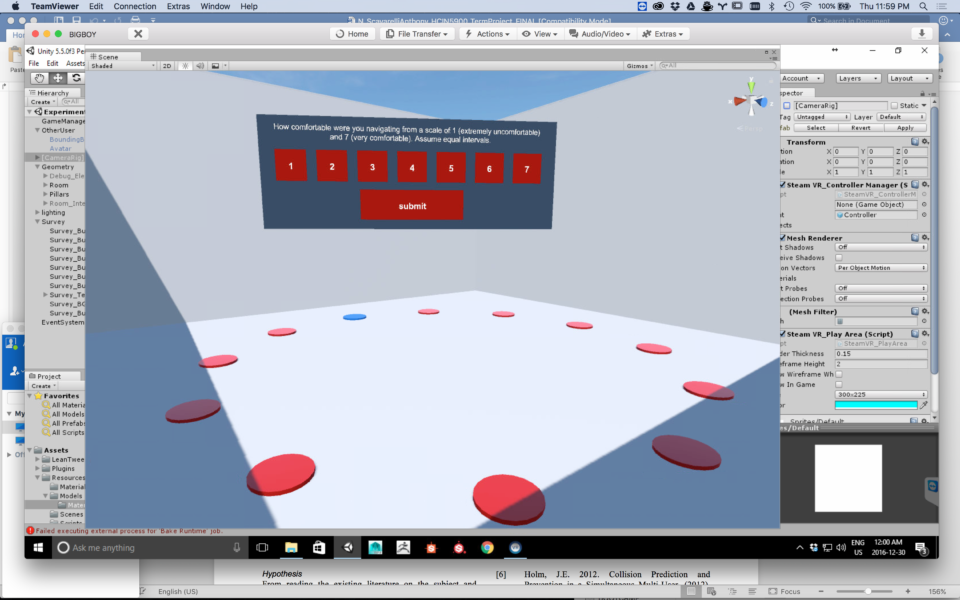

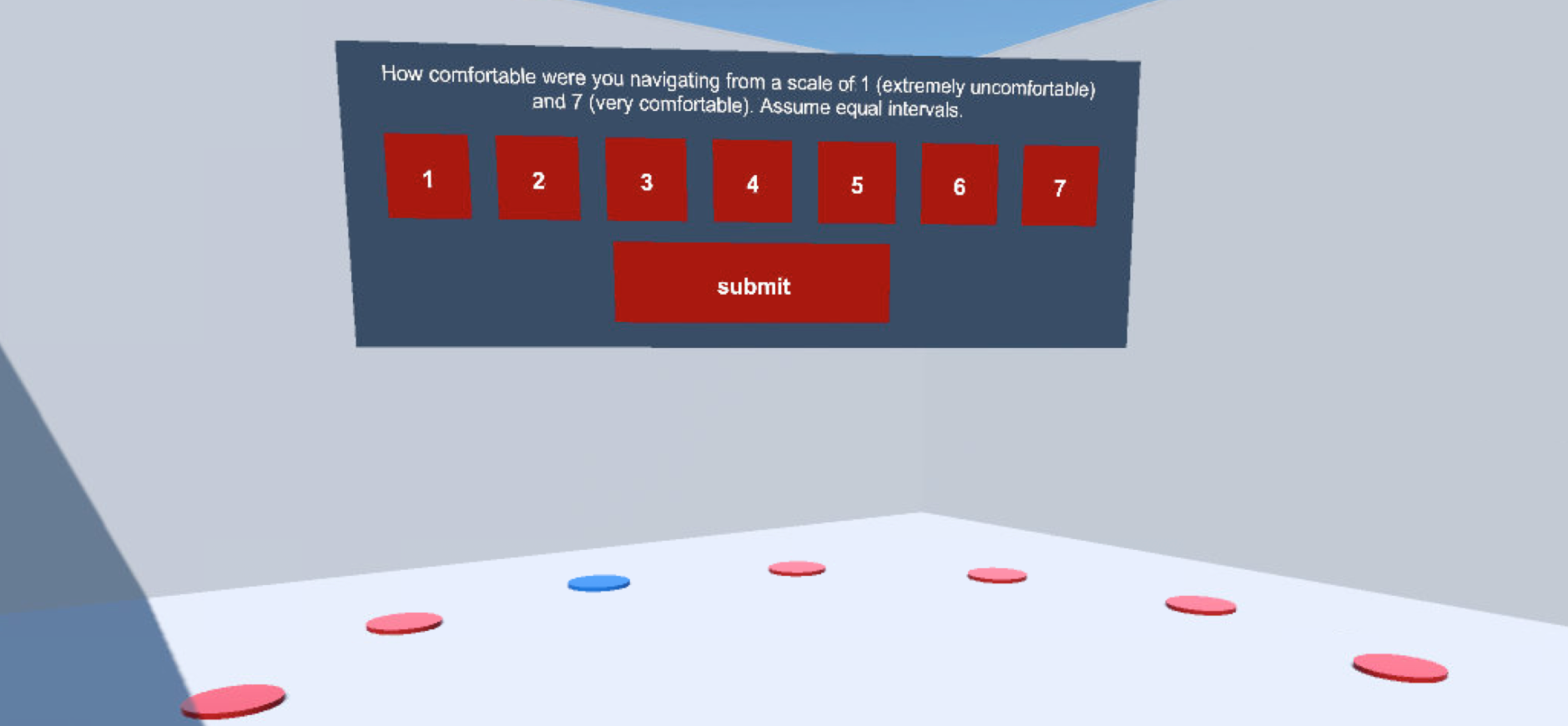

When I did my first VR experiments, testing which kinds of visualizations could help us avoid colliding with each other in shared VR spaces (something that I feel is inevitable as the technology becomes more ubiquitous), I used Unity. It seemed obvious to me that something that compiles native applications, like Unity or Unreal, would be required given the heavy performance demands of rendering VR content.

For an experiment it was crucial that I was not wrestling with performance issues, as they could introduce confounding variables into the studies. I wanted to focus on the human-computer interaction or, in this case specifically, on which visualization method users would prefer for avoiding others in a virtual space.

These are some of the pros and cons I have found when developing VR content (specifically multi-user VR content) in Unity:

- Unity Pros

- Well-developed IDE

- Large and established community

- Asset store (with reviews helping to establish what works / what doesn’t)

- Stack Overflow and the community forums are full of answers to just about any question you may have.

- Plug-ins like the SteamVR plugin are very helpful and straightforward

- Others, like the “ignored” Lab Renderer plug-in, are helpful too, with the caveat that you have to fix some shader code yourself

- Strongly typed languages like C# are much easier to structure and are synchronous by default, so there are no strange threading issues when loading in content.

- Unity Cons

- Requiring an HMD to test VR content

- Requiring multiple machines and multiple sets of VR equipment to test multiple users

- Long build times

- Passing around a huge .exe to clients

- Passing a build to someone (e.g. a client) to test or demo is difficult if they do not have the required hardware.

Thoughts on Aframe (WebVR)

Throughout my review of both the research and commercial literature around multi-user VR content, some gaps quickly became apparent, accessibility across many devices being the largest. Specifically, there is the question of “How do we support more users than just those comfortable in VR/AR HMDs, whether the barrier is usability or cost?” It was around this time that I stumbled across WebVR/WebXR and found Aframe.

I had not touched JavaScript for a while, much preferring strongly typed languages like C++ for many of my interactive art and research projects, using libraries like openFrameworks and Cinder. Honestly, I found JavaScript to be a mess: it was very difficult to get the performance, the scoping, and the run-time errors I wanted in order to better diagnose problems. Fortunately, I have found tools like Browserify and modern JavaScript/ECMAScript 6 to be so much better than the JavaScript of old (love the const and let declarations!!), and so I started to learn Node.js, socket.io, and Aframe to recreate what I had done in Unity a few months earlier.

https://twitter.com/PlumCantaloupe/status/961677168316006400

- Aframe/WebVR Pros

- speed of development

- very friendly and helpful community (Slack, Stack Overflow etc.)

- open-source!! Can view how code works behind the scenes, tinker, borrow, fix etc.

- Can easily create VR content for all devices / platforms

- Easy to pass on to someone else (without the requisite hardware) to get something passably running

- Responsive VR/AR design is going to be huge (IMO), and WebVR is the only platform I have come across that supports this natively.

- Can easily access Three.js underneath (if using Aframe)

- Lots of great components available for speeding up development, such as Aframe-extras from Don McCurdy and Networked-Aframe from Hayden Lee

- Ability to quickly iterate and develop other views. I don’t have to develop/compile/distribute a new app to create some interesting 2D view of a Virtual Environment (e.g. an exocentric top-down view of all users in a VR space). I just create a new web page!
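
As a rough illustration of that last point, an exocentric top-down view mostly comes down to dropping the vertical axis and mapping the other two onto the page. The sketch below assumes Aframe's convention of y as "up" (so the floor is the x/z plane) and a square room centred on the origin. The `UserPosition` and `MapPoint` types, the `toTopDown` function, and the sample values are all hypothetical and not part of any library.

```typescript
// Hypothetical shape of a user's position in the shared VR space (metres).
interface UserPosition { id: string; x: number; y: number; z: number; }

// Hypothetical shape of a marker on the 2D map (pixels from the top-left corner).
interface MapPoint { id: string; left: number; top: number; }

// Project users onto a square top-down map by ignoring height (y)
// and scaling the x/z floor plane to pixel coordinates.
function toTopDown(users: UserPosition[], roomSize: number, mapSizePx: number): MapPoint[] {
  const scale = mapSizePx / roomSize; // pixels per metre
  return users.map((u) => ({
    id: u.id,
    left: (u.x + roomSize / 2) * scale,
    top: (u.z + roomSize / 2) * scale,
  }));
}

const points = toTopDown(
  [
    { id: "alice", x: 0, y: 1.6, z: 0 },
    { id: "bob", x: -2, y: 1.6, z: 1 },
  ],
  4,   // assumed 4 m x 4 m room
  400  // assumed 400 px x 400 px map
);
console.log(points);
// alice lands at the centre (200, 200); bob at the left edge (0, 300)
```

In a real page these points could drive absolutely positioned elements or a canvas, fed by whatever position updates the multi-user server already sends.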

- Aframe/WebVR Cons

- performance is not as good as native, but Firefox Nightly and Chrome Canary have been making huge strides, and I have heard Oculus’s browser is also very good.

- browser support is very hit and miss (especially on non-Windows platforms). To be expected, as WebVR has not been standardized yet.

- can be difficult to find add-ons that are stable enough for use

- It all feels very experimental, so it is frightening to think about using it for production-quality work and research

- Have to wrestle with asynchronicity issues that you generally don’t run into with Unity (e.g. why is this model loading BEFORE something else it is dependent on …)

- Not very clear what the best practices are for extending functionality in Aframe (Do I create another component? Do I copy and paste someone else’s code?), especially as I cannot control the order of component loading, as far as I am aware

- Farther from the “metal”, so it is harder to make it do things it was not meant to do (e.g. bring in a non-web library such as Kinect tracking)
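
The asynchronicity point above can be illustrated with a small sketch. This is not Aframe code: `loadAsset` is a hypothetical stand-in for any asynchronous loader, and the asset names and delays are made up. The idea is simply to make a dependency explicit by awaiting it, rather than relying on whichever load happens to finish first.

```typescript
// Hypothetical stand-in for an asynchronous loader (model, texture, etc.).
// Resolves after `delayMs` and records the order in which loads finish.
function loadAsset(name: string, delayMs: number, log: string[]): Promise<string> {
  return new Promise((resolve) => {
    setTimeout(() => {
      log.push(name);
      resolve(name);
    }, delayMs);
  });
}

// The avatar depends on the room, so wait for the room first,
// even though the room is the slower of the two to load.
async function loadSceneOrdered(log: string[]): Promise<string[]> {
  await loadAsset("room", 30, log);  // dependency first
  await loadAsset("avatar", 5, log); // dependent second
  return log;
}

// Start both loads at once: the faster "avatar" load finishes before
// the "room" it depends on, which is the surprise described above.
async function loadSceneUnordered(log: string[]): Promise<string[]> {
  await Promise.all([loadAsset("room", 30, log), loadAsset("avatar", 5, log)]);
  return log;
}

loadSceneOrdered([]).then((order) => console.log("ordered:", order.join(", ")));
loadSceneUnordered([]).then((order) => console.log("unordered:", order.join(", ")));
```

The ordered version trades a little loading time for predictability, while the unordered version is fine whenever the assets really are independent.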

Summary

Overall I am very happy with Aframe and WebVR. I love playing around with new technologies, but just being able to test one to many users on a single machine by opening another instance of a browser (or just another tab) is extremely powerful and so very organic. I truly feel this is the future of VR. VR and AR devices will undoubtedly melt into a single device, and content should respond to the type of device it is currently being displayed on. Just look at how powerful responsive web design has been for 2D content across our multitude of “smart” devices.

We cannot all have access to expensive immersive VR HMDs, nor do we always want to use them to access these worlds, given the convenience of just opening a browser on a phone. WebVR/WebXR is still very much a work in progress, but there are many talented people working on it, including teams from Mozilla, Google, and Supermedium. I am going to keep working in this space as it serves my needs for a multi-device, multi-user platform. To anyone hesitant about jumping in: please do. It is a powerful glimpse of the future, and a way for us all to easily build VR content.

In closing, these are some of my hopes for the Aframe and WebVR/WebXR of the future (at least the few I can think of currently). I hope that others will also share their experiences!

- Browsers to support embedding web pages into VR content – I want to be able to open a webpage in VR.

- A standardized way to extend component functionality in Aframe

- A way to control the loading order of components in Aframe (update: I have been pointed to a dependency feature in components that I will have to research further).

- We perhaps don’t want a centralized “OASIS” for multi-user content, but perhaps we do want a standardized way to keep avatar customizations persistent across the many web pages (perhaps avatar customization within the browser itself?).

- Would like a more fully featured WebGL context (why not geometry shaders?)
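
On the loading-order wish: the dependency feature mentioned in the update is, as far as I understand it, a `dependencies` array on an Aframe component definition that names the components to initialize first. The sketch below is not Aframe's implementation. It only illustrates the underlying idea of working out an initialization order from declared dependencies. The `ComponentDef` type, the `initOrder` function, and the component names are hypothetical.

```typescript
// Hypothetical, simplified component definition: a name plus the names
// of the components that must be initialized before it.
interface ComponentDef {
  name: string;
  dependencies?: string[];
}

// Return component names in an order where every component comes
// after all of its dependencies (a depth-first topological sort).
function initOrder(defs: ComponentDef[]): string[] {
  const byName = new Map<string, ComponentDef>();
  for (const def of defs) byName.set(def.name, def);

  const order: string[] = [];
  const state = new Map<string, "visiting" | "done">();

  function visit(name: string): void {
    if (state.get(name) === "done") return;
    if (state.get(name) === "visiting") {
      throw new Error(`Circular dependency involving "${name}"`);
    }
    const def = byName.get(name);
    if (!def) throw new Error(`Unknown component "${name}"`);

    state.set(name, "visiting");
    for (const dep of def.dependencies ?? []) visit(dep);
    state.set(name, "done");
    order.push(name);
  }

  for (const def of defs) visit(def.name);
  return order;
}

const order = initOrder([
  { name: "avatar", dependencies: ["networked-user"] },
  { name: "networked-user", dependencies: ["socket"] },
  { name: "socket" },
]);
console.log(order.join(" -> "));
// prints "socket -> networked-user -> avatar"
```

Declaring dependencies like this keeps the ordering knowledge next to the component that needs it, instead of relying on the order in which scripts or attributes happen to appear.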

Thanks again to all those contributing to WebVR in the browsers, WebGL, and the libraries on top. The web opens up many possibilities.